
The State of Interop (2025)

TLDR: without interop, crypto fails; interop/acc.

Prelude

Interop protocols are the unsung heroes of the crypto ecosystem. Over the years, they’ve quietly built the infrastructure that underpins many of the most exciting developments in the space. What started as a niche problem — connecting isolated blockchains — has blossomed into one of the most important areas of innovation in all of crypto.

This article is a snapshot of where the interop ecosystem stands now. It’s a guide to understanding how interop is powering the most important narratives in crypto, and why it matters more than ever. We take a look at how far interoperability has come, what’s new and noteworthy, and what trends are emerging that will shape the future.

Key takeaways:

Bridging is faster, cheaper, and more secure than ever.

Interop is the bedrock that powers everything, from token issuance to intents to chain abstraction.

Modular interop protocols with customizable verification may be the endgame.

The future is tokenized onchain assets and interop token standards will play a huge role.

Every ecosystem is putting interop first. Those that don’t struggle to gain early traction and unlock liquidity flows.

Any new chain launching today has interop baked in from the start — whether that’s liquidity bridging (intents, pools, aggregators), canonical asset issuance (interop token standards), or message passing for wider connectivity.

For users, developers, and investors, interop is where the action is.

Let’s dive in!

Bridging Is Now Insanely Cheap And Fast — Intents Are Taking Over

It’s trendy on Twitter to bash bridges. Everyone’s doing it, so it must be true, right? But that take is stale.

Sure, bridges did suck — back in the day. Two years ago, using a bridge felt like playing an endless game of Temple Run, dodging obstacles, madly clicking the screen and praying your funds didn’t vanish into the wormhole (pun intended). If you had a bad experience back then, I get it. You have every reason to hate bridges.

But the bridges of today aren’t the bridges of two years ago. They’re faster, cheaper, more secure, and way less painful. Intent-based design has quietly changed the game when it comes to user experience on small transfers, while pool-and-message-based networks have driven the cost of large transfers down dramatically.

The brutal truth is that users care about two things — speed and cost. Align the incentives with those priorities, and you get better bridges. The intent-based model nails it:

You send funds on Chain A.

Solver sends what you need on Chain B.

Pay a small fee to the solvers and you’re done.

A verification protocol (oracle / native or third-party interop) ensures that all the above steps have been completed correctly.

Everyone wins.
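The four steps above can be sketched as a toy settlement flow. This is a simplified model, not any specific bridge's contracts: `Intent`, `Fill`, `verify_fill`, and `settle` are hypothetical names, balances are plain dictionaries, and the whole verification layer is reduced to a single check function.

```python
from dataclasses import dataclass


@dataclass
class Intent:
    user: str
    origin_chain: str
    dest_chain: str
    amount_in: int       # smallest token units the user deposits on the origin chain
    min_amount_out: int  # least the user will accept on the destination chain


@dataclass
class Fill:
    solver: str
    recipient: str
    dest_chain: str
    amount_out: int      # what the solver actually delivered on the destination chain


def verify_fill(intent: Intent, fill: Fill) -> bool:
    """Stand-in for the verification layer (oracle, native, or third-party interop)."""
    return (
        fill.recipient == intent.user
        and fill.dest_chain == intent.dest_chain
        and fill.amount_out >= intent.min_amount_out
    )


def settle(intent: Intent, fill: Fill, origin_ledger: dict) -> int:
    """Release the user's origin-chain deposit to the solver once the fill verifies.

    Returns the solver's fee: what it is repaid minus what it delivered.
    """
    if not verify_fill(intent, fill):
        raise ValueError("fill failed verification; the deposit stays escrowed")
    origin_ledger[intent.user] -= intent.amount_in
    origin_ledger[fill.solver] = origin_ledger.get(fill.solver, 0) + intent.amount_in
    return intent.amount_in - fill.amount_out


# Step 1: Alice deposits 1 USDC (6 decimals) on chain A.
ledger = {"alice": 1_000_000}
intent = Intent("alice", "A", "B", amount_in=1_000_000, min_amount_out=995_000)
# Step 2: a solver pays Alice on chain B out of its own inventory.
fill = Fill("solver1", "alice", "B", amount_out=998_000)
# Steps 3-4: the fill is verified and the solver is repaid, keeping a small fee.
print(settle(intent, fill, ledger))  # 2000
```

The user never waits for the verification step: the solver fronts the funds on chain B and carries the settlement delay itself, which is why intent-based transfers feel so fast.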

This process is simple and fast. Consequently, standards are higher now across the board. Nobody wants to wait minutes (or even seconds) longer than necessary. That competitive pressure has forced bridges to level up, and the users are winning as a result.

The data backs this up. Over the last 30 days or so:

Across fills about 30% of volume in under 15 seconds (interestingly, these fastest fills also generate the most fees relative to size), 20% in 15–60 seconds, and 50% above a minute.

Stargate handles most of its volume in the 30–60 second range.

That’s fast. And remember, Stargate isn’t even an intent-based bridge, which goes to show how much we’ve improved in terms of speed in general (read more about Stargate V2 to see why it’s so cool).

If you’re still skeptical, try these super-fast bridges yourself: Relay, Gas.zip, Mayan. Once you experience them, you won’t go back to the old narratives about bridges.

The common thread among these bridges? They’re all intent-based by design.

Every new bridge in the market today is going down the intents path. It’s rare to see a design philosophy adopted so quickly from both a development and user perspective. Almost everyone loves intents – users appreciate the speed, developers like the design philosophy, and even skeptics acknowledge their benefits (though, like anything, they come with trade-offs of their own).

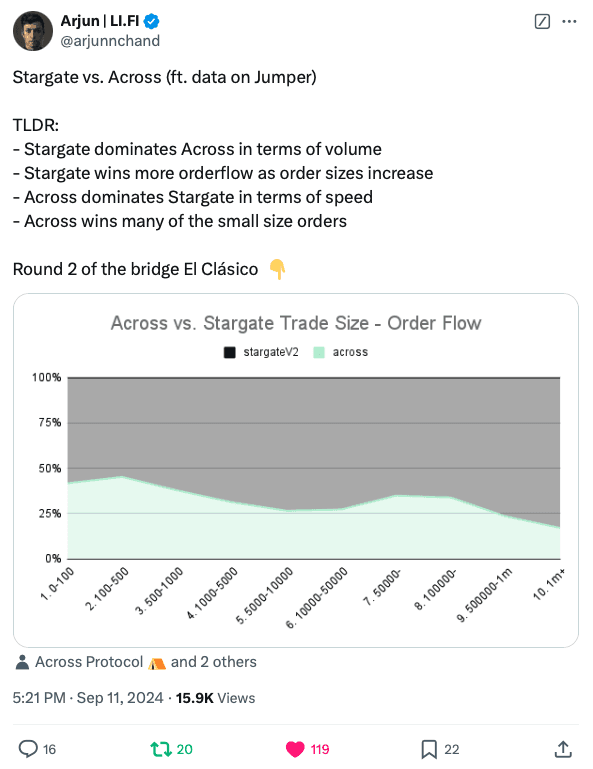

It must be noted, though, that for large-value orders, bridges like Stargate, Circle CCTP, and native bridges still offer comparatively better rates.

Source: Arjun on Twitter

While intents have captured much of the mindshare and reshaped public perception around bridging, innovation didn’t stop there. For large value orders, bridges like Stargate have pushed capital efficiency to new heights, while solutions like Circle CCTP, already renowned for handling size, are now receiving upgrades that make them faster than ever.

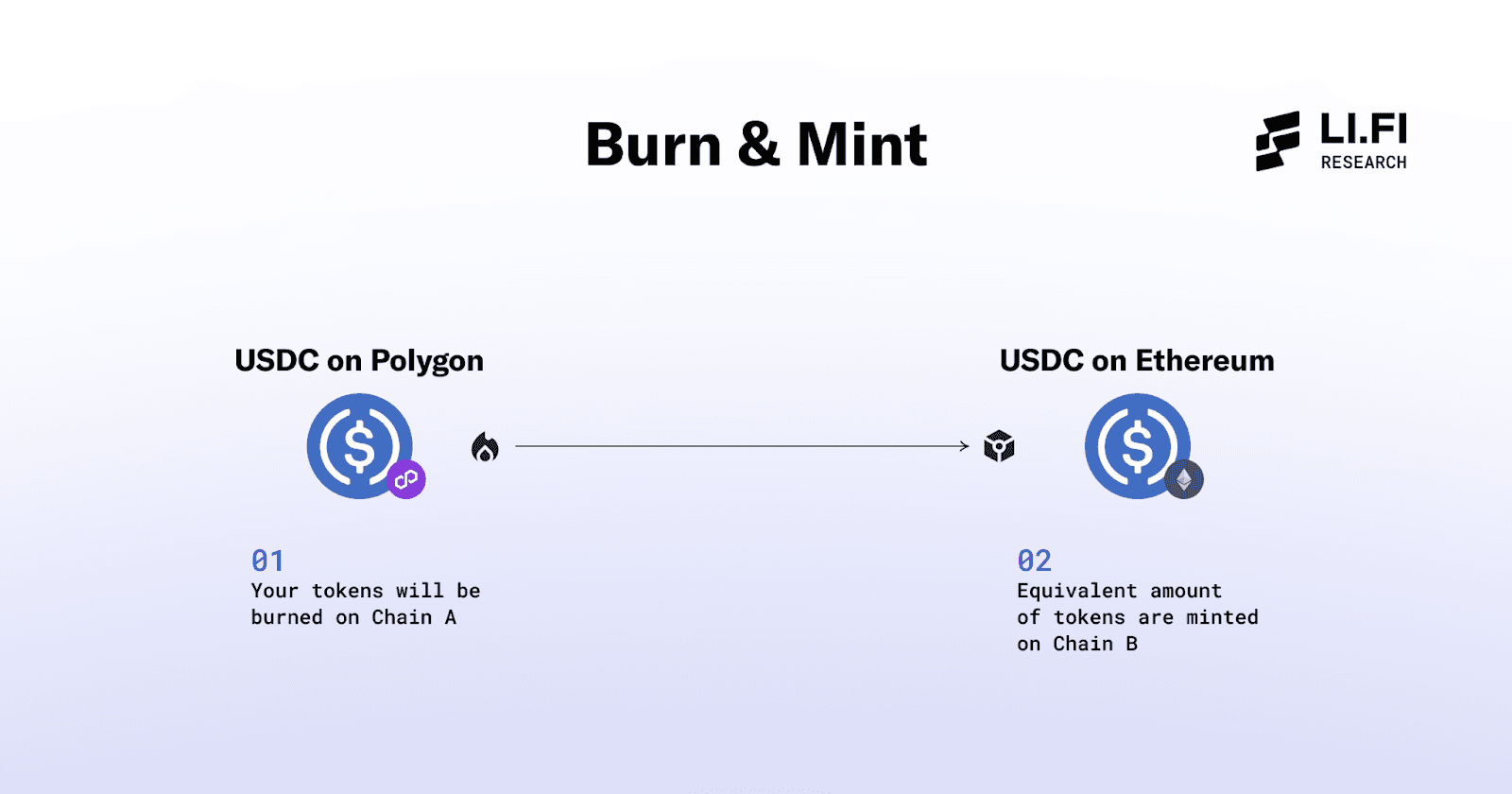

Additionally, interop token standards (like OFT, NTT, ITS, and Warp Token) remain equally important innovations. They deliver unparalleled capital efficiency for moving size across chains with zero slippage, simply by burning and minting tokens on different chains. I expect these token standards to play an even more central role in the bridging landscape moving forward.
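A toy model makes the zero-slippage property concrete: because a transfer burns on the source chain and mints the same amount on the destination, total supply across chains never changes and no liquidity pool is involved. The `BurnMintToken` class is hypothetical and tracks only per-chain supply; real standards like OFT or NTT also handle per-user balances, message verification, and access control.

```python
class BurnMintToken:
    """Toy burn-and-mint token that only tracks circulating supply per chain."""

    def __init__(self, initial_supply: dict):
        self.supply = dict(initial_supply)

    def total_supply(self) -> int:
        return sum(self.supply.values())

    def transfer(self, src: str, dst: str, amount: int) -> None:
        if amount <= 0 or self.supply.get(src, 0) < amount:
            raise ValueError("invalid amount or not enough supply on the source chain")
        self.supply[src] -= amount  # burn on the source chain
        # In a real standard, the mint happens only after the cross-chain message is verified.
        self.supply[dst] = self.supply.get(dst, 0) + amount  # mint on the destination chain


token = BurnMintToken({"ethereum": 1_000_000, "arbitrum": 0})
token.transfer("ethereum", "solana", 250_000)
print(token.supply)          # {'ethereum': 750000, 'arbitrum': 0, 'solana': 250000}
print(token.total_supply())  # 1000000
```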

This diversity of approaches underscores a simple truth: it’s good to have multiple ways to solve the same problem. Intents have made user experience smoother, reducing friction and stress for the users and making it super-fast and cheap to move small amounts across chains.

Meanwhile, pool and message-based bridges like Stargate (or Circle CCTP, which uses a message-based burn-and-mint model) and interop token standards are important for enabling high value transfers in a capital efficient manner.

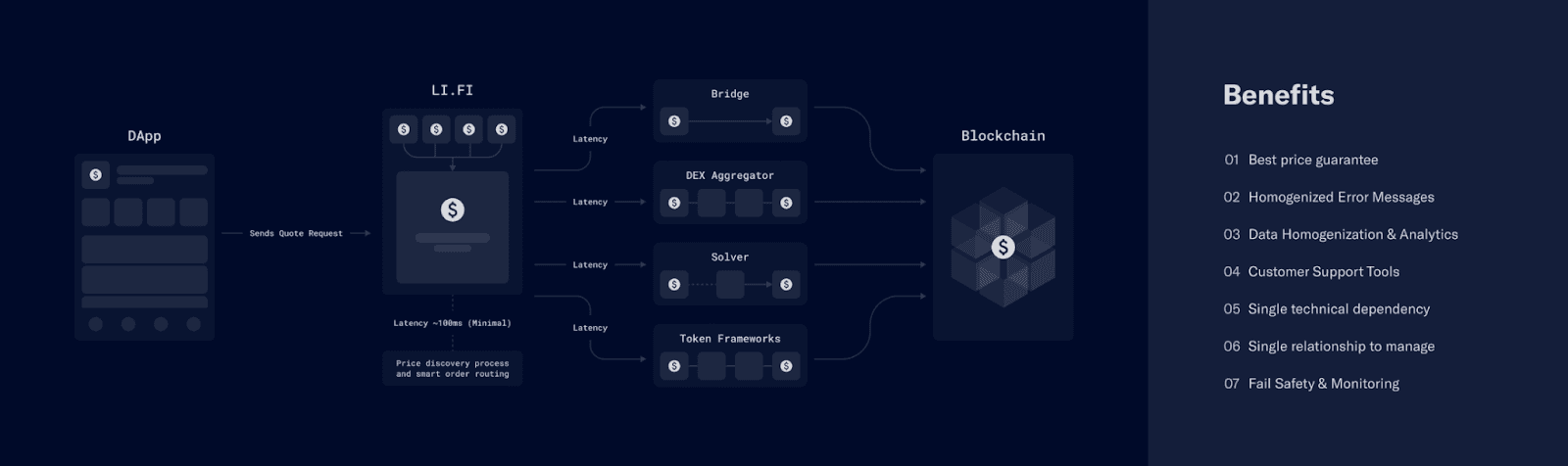

Each approach serves its purpose, reinforcing the broader ecosystem and making the case for aggregation (LI.FI).

Aggregation via LI.FI
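Aggregation boils down to collecting quotes from different route types and picking the best one for the user's constraints. A minimal sketch, assuming each route returns a hypothetical `Quote` with an output amount and an expected completion time; the figures in the usage lines are invented for illustration, not real quotes.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Quote:
    route: str         # e.g. an intent bridge, a pool bridge, or a burn-and-mint standard
    amount_out: int    # what the user receives on the destination chain
    eta_seconds: int   # expected time until funds arrive


def best_route(quotes: List[Quote], max_wait_seconds: Optional[int] = None) -> Quote:
    """Return the quote that delivers the most, preferring the faster route on ties."""
    candidates = [
        q for q in quotes if max_wait_seconds is None or q.eta_seconds <= max_wait_seconds
    ]
    if not candidates:
        raise ValueError("no route satisfies the time constraint")
    return max(candidates, key=lambda q: (q.amount_out, -q.eta_seconds))


small = [Quote("intent", 99_800, 10), Quote("pool", 99_700, 45)]
large = [
    Quote("intent", 9_940_000, 20),
    Quote("pool", 9_985_000, 50),
    Quote("burn_mint", 9_990_000, 900),
]
print(best_route(small).route)                       # intent
print(best_route(large).route)                       # burn_mint
print(best_route(large, max_wait_seconds=60).route)  # pool
```

A production aggregator would likely weigh more than output and speed (gas on each side, security assumptions, historical failure rates), but the shape of the decision is the same: no single route type wins for every transfer size.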

The Emergence of SolverFi

The ripple effects of intents don’t stop at making bridging cheap and fast. With intents, it’s solvers all the way down, and solvers are now becoming an ecosystem of their own. Enter SolverFi: an emerging field where teams are building solver infrastructure:

Platforms for solver collaboration (Khalani)

Tools to simplify solver operations (Everclear, Nomial, Tycho)

While still in the early stages (with most of the platforms above either newly live on mainnet or yet to launch), the groundwork for solver infrastructure is being laid, setting the stage for what could become one of the most interesting fields in crypto.

Eventually, we’ll see solvers-as-a-service become its own platform. Someone will crack the code for chains and apps to easily integrate solver networks and individual solvers, aligning incentives across all participants.

Flip The Script, Abstract The Chains?

Intent-based design is the kernel that has birthed the bag of popcorn called chain abstraction – aka building an application that interacts with multiple chains while feeling like a single, interconnected “crypto” experience. ~ With Intents, It’s Solvers All The Way Down (LI.FI)

This shift wasn’t possible until bridges became fast and cheap. The speed and cost we now take for granted serve as the foundation for many chain abstraction protocols. Add in messaging bridges and other interoperability tools, and you get an ecosystem where multi-chain interactions start to feel, well… abstracted. It’s satisfying to see how these previously disconnected pieces have clicked together.

Source: Kram on Twitter

Chain abstraction started picking up steam toward the end of 2023. Connext (now Everclear) was first to use the term, showcasing a restaking flow where users could deposit funds from any L2 without needing to worry about which chain they were on or which asset they needed.

Since then, chain abstraction quickly grew into one of the dominant narratives of 2024, and for good reason: at the end of the day, we’re all here to improve the experience of using crypto.

In early 2024, Frontier Research introduced the CAKE Framework — a way to understand the chain abstraction stack. Since then, the space has exploded with activity. Today, dozens of projects are exploring what can be built on interop rails to abstract the chains, and it’s fascinating to watch it all unfold in real-time.

These protocols are built on important emerging concepts: account abstraction, smart accounts, intents, solvers, unified balances, resource locks; the list goes on. Each of these ingredients contributes to a smoother, faster, and more intuitive crypto experience for both developers and users. Some notable projects include OneBalance, Rhinestone, Biconomy (which acquired Klaster), and others.

Source: The Rollup on Twitter
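A unified balance means the app treats funds scattered across chains as one number and works out behind the scenes where to source them. One simple, hypothetical heuristic: spend what is already on the target chain first, then draw from the largest remaining balances to keep the number of cross-chain moves low. `plan_spend` is illustrative only and is not how OneBalance, Rhinestone, or Biconomy actually route funds.

```python
def plan_spend(balances: dict, target_chain: str, amount: int) -> dict:
    """Decide how much to draw from each chain so `amount` can be spent on `target_chain`."""
    if amount <= 0 or sum(balances.values()) < amount:
        raise ValueError("amount must be positive and covered by the unified balance")
    # Funds already on the target chain need no bridging, so use them first;
    # then draw from the largest balances to keep cross-chain moves to a minimum.
    others = sorted((c for c in balances if c != target_chain), key=balances.get, reverse=True)
    plan, remaining = {}, amount
    for chain in [target_chain] + others:
        take = min(balances.get(chain, 0), remaining)
        if take > 0:
            plan[chain] = take
            remaining -= take
        if remaining == 0:
            break
    return plan


balances = {"base": 30, "arbitrum": 50, "optimism": 20}
print(plan_spend(balances, "base", 90))  # {'base': 30, 'arbitrum': 50, 'optimism': 10}
```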

Chain abstraction protocols are pushing forward some much-needed UX improvements in crypto. It’s the kind of progress that probably would’ve happened eventually, but these protocols are accelerating the timeline. I’m really excited to see what the chain abstraction space cooks up in the next year, and it’s good to see some products already live.

Looking ahead, two battles are emerging: one at the app level, and one at the infra level.

At the app level, the race is about distribution and user adoption. Will chain abstraction fundamentally change how people trade, bridge, and interact with apps? Or will this all amount to incremental improvements that gradually become standard across all interfaces? Only time will tell. The good news is that we’re already seeing the first apps and wallets launch in the chain abstraction paradigm: Infinex, UniversalX (by Particle Network), and Arcana Wallet are a few examples.

However, there’s still a significant onboarding hurdle for these apps: users must deposit funds into a new account, which differs for each chain-abstracted app. This is an inherent limitation of ERC-4337 (Account Abstraction), where the smart account is a separate contract address from the user’s existing EOA. This will be overcome when EIP-7702 goes live, giving EOAs smart-account-like functionality.

At the infra level, chain abstraction protocols face a challenge similar to earlier interop protocols. They need to convince developers to think in terms of a multi-chain world rather than being anchored to one specific chain or ecosystem. How will they differentiate themselves from legacy interop protocols? How will they compete, or even collaborate, with them? These are some things worth keeping a close eye on.

Overall, I think very few protocols in this category today are truly doing something novel and bringing fresh ideas to the table. Many existing interoperability protocols are already well-positioned to deliver some of the same benefits that chain abstraction infrastructure aims to unlock, and they’re dedicating significant resources to building “full stack interoperability” solutions.

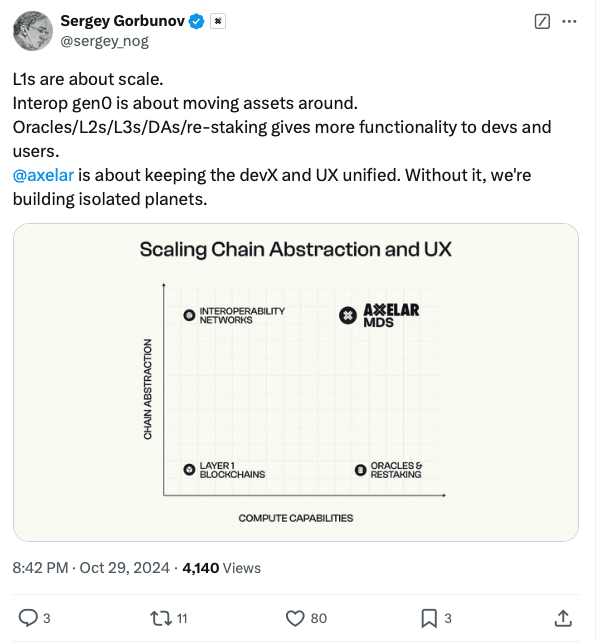

In fact, we’re already seeing a strategic shift in comms from players like Axelar with their Mobius Development Kit, which emphasizes scaling chain abstraction and improving UX. I believe every interoperability protocol has the potential to play in this space, so it’ll be interesting to see how dedicated chain abstraction infrastructure protocols differentiate themselves, compete, and attract developers.

Source: Sergey on Twitter

Moreover, a new school of thought is emerging in interoperability with players like Polymer. Instead of relying on traditional messaging between chains, they’re betting on proofs as the foundation for interoperability. This approach is seeing some early adoption, particularly among teams aligned with the "chain abstraction" vision.
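The core idea behind proof-based interop is that the destination chain checks a cryptographic proof that an event was included in state the source chain committed to, so it matters much less who relays the message. A toy Merkle inclusion proof (SHA-256, with the last node duplicated on odd-sized levels) illustrates this. It is not Polymer's actual design, and a real system must also establish that the root itself is authentic, for example through a light client or the rollup's settlement layer.

```python
import hashlib


def _hash(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _next_level(level: list) -> list:
    if len(level) % 2:
        level = level + [level[-1]]  # duplicate the last node on odd-sized levels
    return [_hash(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves: list) -> bytes:
    if not leaves:
        raise ValueError("need at least one leaf")
    level = [_hash(leaf) for leaf in leaves]
    while len(level) > 1:
        level = _next_level(level)
    return level[0]


def merkle_proof(leaves: list, index: int) -> list:
    """Sibling hashes from leaf to root; each flag says whether the sibling is on the left."""
    level = [_hash(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        sibling = index ^ 1
        proof.append((padded[sibling], sibling < index))
        level = _next_level(level)
        index //= 2
    return proof


def verify_inclusion(leaf: bytes, proof: list, root: bytes) -> bool:
    """What the destination chain checks: does `leaf` hash up to the trusted `root`?"""
    node = _hash(leaf)
    for sibling, sibling_is_left in proof:
        node = _hash(sibling + node) if sibling_is_left else _hash(node + sibling)
    return node == root


events = [b"deposit:1", b"deposit:2", b"swap:3", b"withdraw:4", b"deposit:5"]
root = merkle_root(events)        # committed on the source chain
proof = merkle_proof(events, 2)   # can be supplied by anyone
print(verify_inclusion(b"swap:3", proof, root))    # True
print(verify_inclusion(b"swap:999", proof, root))  # False
```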

In short, chain abstraction is still early, but there’s big potential. Whether it becomes a fundamental shift in how crypto works or simply a clever optimization remains to be seen. But either way, the space is moving fast — and it’s hard not to be excited about what comes next.

For a deeper dive into chain abstraction, I recommend checking out Chain Abstraction in 2024: A Year in Review by Omni, Particle, and LI.FI.

Modular Interop Protocols With Customizable Verification (The Endgame?)

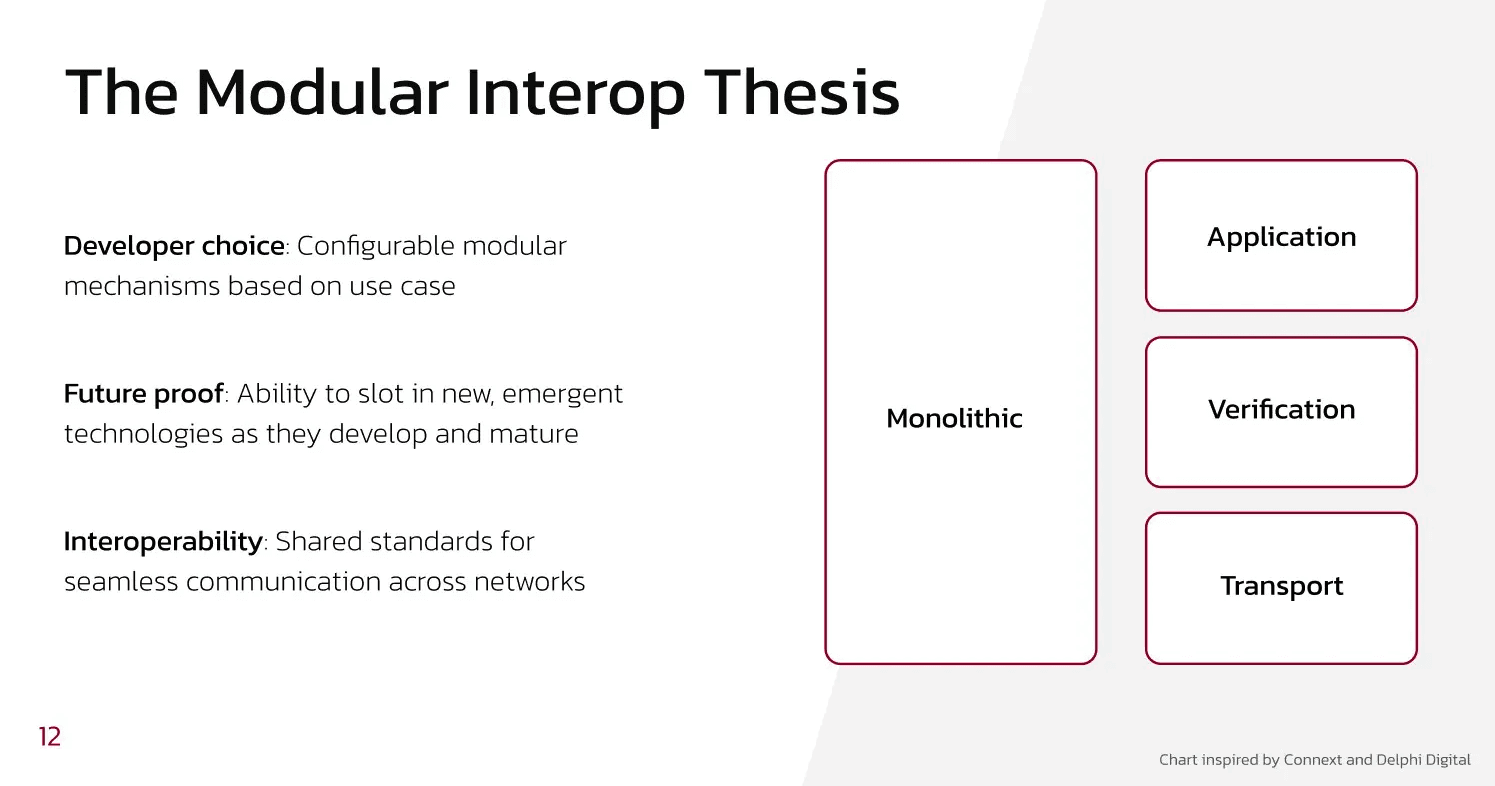

The interop protocol stack of today looks vastly different from what we had just two years ago. Like every other part of the crypto ecosystem, interop protocols have modularized.

In simple terms: early interop stacks were monolithic — fewer components, each burdened with multiple responsibilities. Today, the stack is specialized. Each layer has a specific role, excels at that role, and operates largely independently of the others. This modular approach has significantly improved both the security and functionality of interop protocols.

In fact, the modularization of the interop stack has created some of the most prominent use cases at the application layer, most notably in the form of intents and intent-based applications:

Application – interpreting users' intents and executing them via solvers.

Verification – ensuring the validity and accuracy of the intents being filled.

Transport – moving data related to the intent fulfilment across chains.

Editor’s note: This should also settle the "intents vs. messaging" debate. Intents are simply the application layer for interop (whether through messaging or other means) — they represent the interface users interact with. Both approaches complement each other, addressing different aspects of the same problem.

The benefits of a modular interop stack. Source: Modular Interop Protocols (0xjim)
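The three layers above can be expressed as separate components with narrow interfaces, which is what makes the verification layer swappable. A minimal sketch, assuming hypothetical `Verifier`, `Transport`, and `IntentApp` types; the HMAC-based `SharedKeyOracle` is only a stand-in for a real signature or proof check.

```python
import hashlib
import hmac
from dataclasses import dataclass, field
from typing import Protocol


class Verifier(Protocol):
    """Verification layer: is this cross-chain message valid?"""

    def verify(self, message: bytes, proof: bytes) -> bool: ...


class SharedKeyOracle:
    """Hypothetical cheap verifier: a MAC from one trusted party (stand-in for a signature)."""

    def __init__(self, key: bytes):
        self._key = key

    def attest(self, message: bytes) -> bytes:
        return hmac.new(self._key, message, hashlib.sha256).digest()

    def verify(self, message: bytes, proof: bytes) -> bool:
        return hmac.compare_digest(self.attest(message), proof)


@dataclass
class Transport:
    """Transport layer: moves (message, proof) pairs without judging validity."""

    inbox: list = field(default_factory=list)

    def send(self, message: bytes, proof: bytes) -> None:
        self.inbox.append((message, proof))


@dataclass
class IntentApp:
    """Application layer: acts only on messages its chosen verifier accepts."""

    verifier: Verifier
    filled: list = field(default_factory=list)

    def receive(self, transport: Transport) -> None:
        for message, proof in transport.inbox:
            if self.verifier.verify(message, proof):
                self.filled.append(message)
        transport.inbox.clear()


oracle = SharedKeyOracle(b"oracle-key")
wire = Transport()
app = IntentApp(verifier=oracle)
wire.send(b"fill:42", oracle.attest(b"fill:42"))
wire.send(b"fill:43", b"forged")
app.receive(wire)
print(app.filled)  # [b'fill:42']
# Swapping verification touches neither the transport nor the app logic.
app.verifier = SharedKeyOracle(b"another-key")
```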

One of the most significant advantages of this modularization is security. Security has always been the Achilles' heel of interoperability, and high-profile hacks gave interop protocols a bad reputation. Modularizing the verification layer lets app teams optimize verification for their specific needs, whether that's security, cost, speed, or any other critical parameter.

A clear signal of the market’s need to use multiple verification solutions simultaneously emerged in the Lido DAO governance forum. When choosing an interop provider for wstETH, the DAO couldn’t settle on a single protocol. Ultimately, they opted for a multi-message aggregation model, selecting Wormhole and Axelar to share verification responsibilities.

Today, every major interop protocol offers customizable verification options:

LayerZero V2 – Decentralized Verifier Networks (DVNs)

Hyperlane – Interchain Security Modules (ISMs)

Axelar – Customizable verification schemes via Amplifier

Wormhole – Flexible verification options beyond the Guardian network when using the NTT framework

Hashi & Glacis – Enable multi-verification setups across interop providers
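Multi-verification setups like the one Lido chose generally come down to a threshold rule: a message is accepted only if enough independent verifiers attest to it. A minimal k-of-n sketch; the providers here are hypothetical callables, and the threshold any real deployment uses is its own configuration choice.

```python
from typing import Callable, Dict

Attester = Callable[[bytes], bool]  # a verifier reduced to "do you vouch for this message?"


def multi_verify(message: bytes, verifiers: Dict[str, Attester], threshold: int) -> bool:
    """Accept a message only if at least `threshold` independent verifiers vouch for it."""
    if not 1 <= threshold <= len(verifiers):
        raise ValueError("threshold must be between 1 and the number of verifiers")
    approvals = sum(1 for vouch in verifiers.values() if vouch(message))
    return approvals >= threshold


# Hypothetical providers: one approves everything, the other only governance messages.
providers = {
    "provider_a": lambda m: True,
    "provider_b": lambda m: m.startswith(b"gov:"),
}
print(multi_verify(b"gov:upgrade", providers, threshold=2))  # True
print(multi_verify(b"mint:1000", providers, threshold=2))    # False
```

Raising the threshold means an attacker has to compromise more independent providers at once, at the cost of paying for and waiting on every one of them.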

Verification Marketplaces

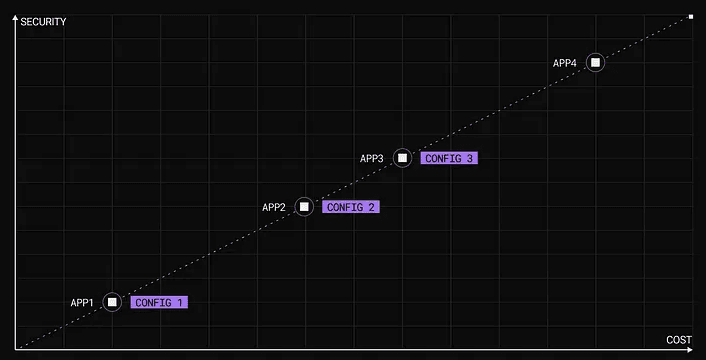

The ability to choose providers effectively turns the verification layer of the interop stack into a marketplace, where different providers specialize in specific trade-offs and serve distinct use cases depending on how they balance security and cost.

For example:

A high-frequency game requiring thousands of messages per minute prioritizes low fees and speed over maximum security – a simple oracle for verification would be sufficient.

A DAO sending a monthly governance message focuses exclusively on maximum security, with no concern for speed or cost – a provider like Hashi or Glacis that plugs into multiple verification providers to offer high security would make sense here.

Source: LayerZero V2 Deep Dive (Kram)
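The game and DAO examples can be framed as picking the cheapest verifier that still meets an app's security floor and latency ceiling. A minimal sketch; `VerifierOption`, its fields, and the numbers in the usage lines are hypothetical, and counting independent parties is only a crude proxy for security.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VerifierOption:
    name: str
    cost_per_msg: int         # arbitrary cost units
    latency_s: int            # typical time to verify a message
    independent_parties: int  # crude proxy for how hard the option is to corrupt


def choose(options: list, *, min_parties: int, max_latency_s: int) -> VerifierOption:
    """Cheapest option that meets the app's security floor and latency ceiling."""
    ok = [
        o for o in options
        if o.independent_parties >= min_parties and o.latency_s <= max_latency_s
    ]
    if not ok:
        raise ValueError("no verifier meets these requirements")
    return min(ok, key=lambda o: o.cost_per_msg)


OPTIONS = [
    VerifierOption("single_oracle", cost_per_msg=1, latency_s=2, independent_parties=1),
    VerifierOption("verifier_set", cost_per_msg=5, latency_s=30, independent_parties=3),
    VerifierOption("multi_provider", cost_per_msg=20, latency_s=600, independent_parties=6),
]
print(choose(OPTIONS, min_parties=1, max_latency_s=5).name)       # single_oracle (the game)
print(choose(OPTIONS, min_parties=5, max_latency_s=86_400).name)  # multi_provider (the DAO)
```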

A new wave of verification-specialized teams is emerging. SEDA, Nodekit, and Polymer are notable examples, and more are surely on the horizon. Additionally, experimental approaches to crypto-economic security, like those seen with EigenLayer, Symbiotic, and Canary, are among the most exciting trends in the space today.

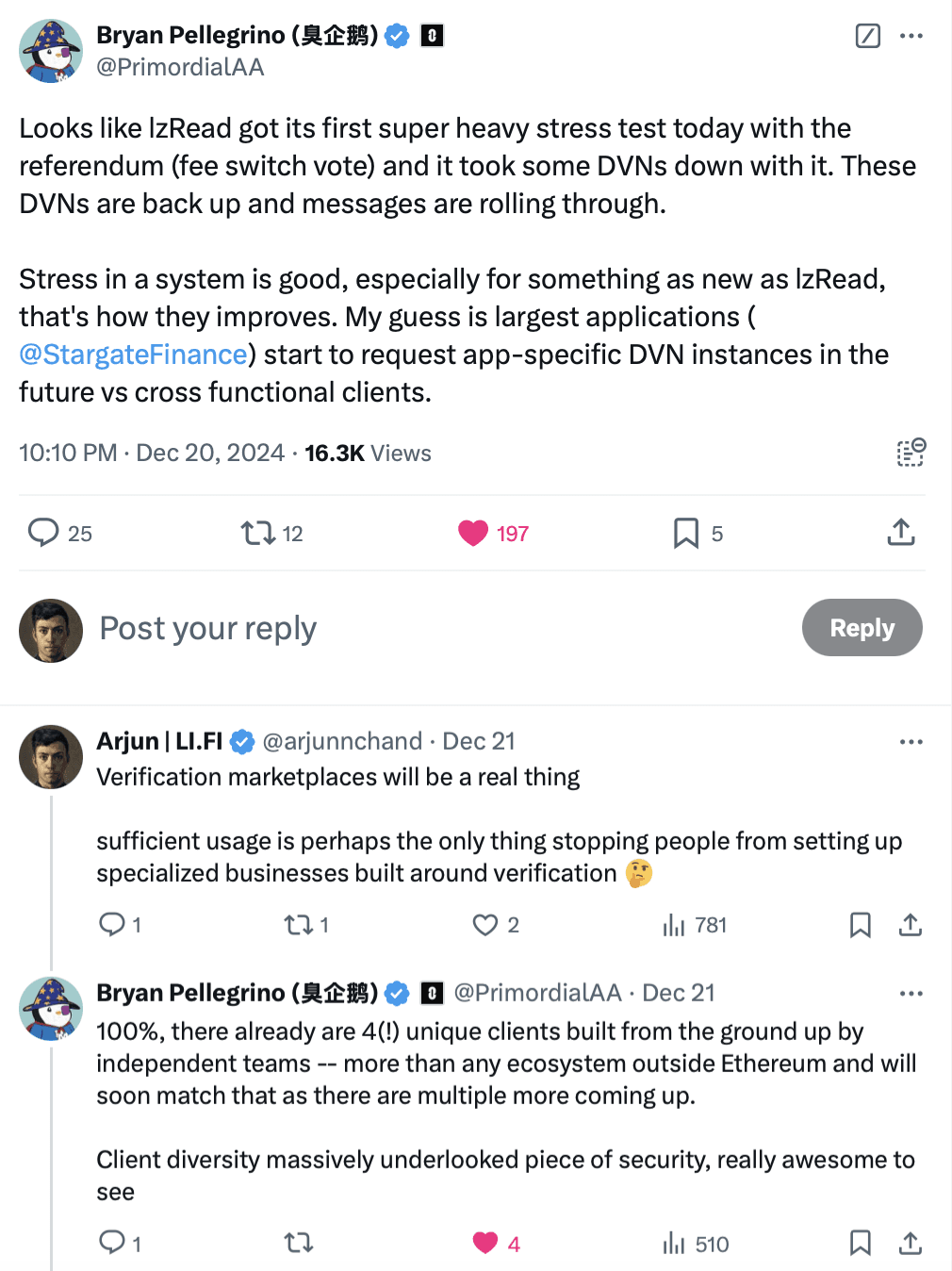

The only reason we aren’t seeing more providers build specialized verification solutions for interop protocols is that messaging volumes haven’t hit critical mass yet, and the cost of running these systems at scale is still high. For most players, the revenue opportunity isn’t compelling unless they handle a substantial share of messages across multiple protocols. Yet there are counterexamples, like Nethermind and Polyhedra, who have shown that building and scaling these systems is not only possible but also profitable if done right (both run DVNs for LayerZero V2).

Moreover, adoption is accelerating. As the number of chains grows and more teams build on interop protocols, messaging volume will inevitably rise, and there’ll be a need for app-specific verification systems.

We’ve already seen hints of adoption reaching critical mass:

During LayerZero’s fee switch vote, the Nethermind DVN was stress-tested under high message volumes, resulting in outages. Apps relying on that specific DVN, like Stargate, also experienced downtime.

This mirrors the “noisy neighbor” problem for apps on blockchains, but in this case, the congestion happens in the verification layer of interop protocols, where one app’s high usage can consume resources and affect others.

The takeaway? Apps need their own DVNs or verification modules to avoid being affected by congestion from other applications.
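The noisy neighbor effect is easy to reproduce with a toy queue: when apps share one verifier with fixed throughput, a burst from one app delays everyone queued behind it. A minimal FIFO simulation, assuming a hypothetical verifier that processes a fixed number of messages per tick; it ignores prioritization, fees, and retries.

```python
from collections import deque


def completion_ticks(arrivals: list, capacity: int) -> dict:
    """Simulate a FIFO verifier that processes `capacity` messages per tick.

    `arrivals` is a list of (tick, app) pairs; returns the tick at which each
    app's last message was verified.
    """
    if capacity < 1:
        raise ValueError("capacity must be at least 1")
    pending = sorted(arrivals, key=lambda a: a[0])  # stable sort keeps order within a tick
    queue = deque()
    done = {}
    tick, i = 0, 0
    while i < len(pending) or queue:
        # Enqueue everything that has arrived by this tick.
        while i < len(pending) and pending[i][0] <= tick:
            queue.append(pending[i][1])
            i += 1
        # Verify up to `capacity` messages, oldest first.
        for _ in range(min(capacity, len(queue))):
            done[queue.popleft()] = tick
        tick += 1
    return done


burst = [(0, "game")] * 100 + [(0, "dao")]
print(completion_ticks(burst, capacity=10))         # {'game': 9, 'dao': 10}: shared verifier
print(completion_ticks([(0, "dao")], capacity=10))  # {'dao': 0}: dedicated verifier
```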

When that future arrives, we can finally leave behind the narrative of interop protocols being “just multi-sigs” (most verification today still relies on multi-sigs), and move closer towards the endgame state of interop protocol stacks that are scalable and secure by design.

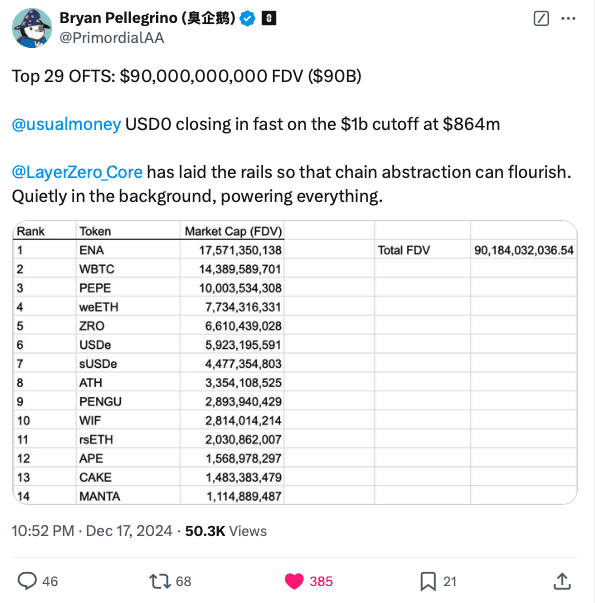

Source: Bryan on Twitter

Plus, these verification systems aren’t limited to checking cross-chain messages. They can also handle data primitives like lzRead from LayerZero and Wormhole Queries from Wormhole, among others. These primitives effectively turn interop protocols into internet protocols, shifting the verifier’s role from “attestation” (did xyz thing occur?) to “computation” (grab xyz thing and format a result), which means their potential market is much bigger than just messaging. And whenever a big new market like this appears, you can expect specialized players to start emerging.
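The difference between the two roles can be shown against a tiny, made-up snapshot of chain state: attestation answers a yes/no question about one event, while computation reads values from several chains and returns a formatted result. This is a hypothetical illustration only; `CHAIN_STATE`, `attest`, and `compute` are invented names and do not reflect the lzRead or Wormhole Queries APIs.

```python
# Hypothetical snapshot of what a verifier can observe on each chain.
CHAIN_STATE = {
    "ethereum": {"events": {"0xabc"}, "vault_tvl": 1_200},
    "arbitrum": {"events": {"0xdef"}, "vault_tvl": 300},
    "base": {"events": set(), "vault_tvl": 500},
}


def attest(chain: str, event_id: str) -> bool:
    """Attestation: did this event occur on this chain? The answer is just yes or no."""
    return event_id in CHAIN_STATE[chain]["events"]


def compute(chains: list, key: str) -> dict:
    """Computation: read `key` on several chains and return a formatted aggregate."""
    per_chain = {chain: CHAIN_STATE[chain][key] for chain in chains}
    return {"per_chain": per_chain, "total": sum(per_chain.values())}


print(attest("ethereum", "0xabc"))                                      # True
print(compute(["ethereum", "arbitrum", "base"], "vault_tvl")["total"])  # 2000
```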

Tokenize Everything With Interop Token Standards

Interop token standards are arguably the most polarizing innovation in the interop space. People either love them or hate them (though, if we’re being honest, given the adoption, many critics would probably be launching their own in a parallel universe), and both sides have valid points.

The supporters appreciate the simplicity: burn and mint a token on any chain, and you’re done. There’s no slippage, relatively fast speeds (as fast as chain finality allows), and significant benefits for token issuers, especially when it comes to managing and accounting for token supply across multiple chains.

Source: Comparing Token Frameworks (LI.FI)

On the other hand, critics raise a valid concern about third-party dependence. Incidents like the Multichain (previously Anyswap) hack set a bad precedent and are often cited to highlight everything that can go wrong because of interop protocols.

But, things have changed a lot since that event a few years ago. The security of interop protocols has genuinely improved, making it less likely we’ll see a repeat of such events – the absence of major hacks in 2024 serves as a testament to the progress made.

Moreover, it’s important to recognize that Multichain represents an earlier version of interop protocols, which were less modular and more centralized. Today’s interop protocols are built as open, modular frameworks, offering much more flexibility, customization, and security for token issuers.

Teams, sentiment, and technology around interop have matured, and auditors have gained a deeper understanding of potential vulnerabilities and what can go wrong. We’ve learned from our mistakes: each standard provides ways to keep ownership of tokens in the hands of issuers, and in the worst-case scenario, if something does go wrong, collateral damage can be reduced through measures like rate limits.
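Rate limits cap how much a single bridge can mint within a time window, so a compromised verifier can only do bounded damage. A minimal sketch, loosely modeled on the per-bridge mint limits in xERC20 and assuming an allowance that refills linearly over a fixed window; `MintRateLimit` is hypothetical, and each standard's actual on-chain rules may differ.

```python
class MintRateLimit:
    """Caps how much one bridge may mint; the allowance refills linearly over `window_s`."""

    def __init__(self, max_limit: int, window_s: int, start: int = 0):
        self.max_limit = max_limit
        self.window_s = window_s
        self.available = max_limit
        self.last_update = start

    def _refill(self, now: int) -> None:
        elapsed = max(0, now - self.last_update)
        # Integer math rounds the refill down, so tiny intervals may add nothing.
        refill = self.max_limit * elapsed // self.window_s
        self.available = min(self.max_limit, self.available + refill)
        self.last_update = now

    def mint(self, amount: int, now: int) -> bool:
        """Mint `amount` at time `now` (seconds) if the allowance covers it."""
        self._refill(now)
        if amount > self.available:
            return False  # rejected: even a compromised bridge cannot exceed the cap
        self.available -= amount
        return True


DAY = 86_400
limit = MintRateLimit(max_limit=1_000_000, window_s=DAY)
print(limit.mint(800_000, now=0))         # True
print(limit.mint(300_000, now=60))        # False: only ~200k is available
print(limit.mint(500_000, now=DAY // 2))  # True: half a day refills ~500k
```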

Ultimately, you can choose which side you fall on, and this resource — Comparing Token Frameworks (LI.FI) — can help you navigate the various token standards and understand their differences.

But here’s the thing: the numbers don’t lie. Interop token standards are seeing massive adoption, and the acceleration in 2024 suggests that 2025 will be an insane year for them.

All major interop players have rolled out their own product for interop token standards:

LayerZero – Omnichain Fungible Token (OFT) standard + Stargate’s Hydra, offering bridging-as-a-service for chains to use OFTs as canonical tokens

Wormhole – Native Token Transfers (NTT)

Axelar – Interchain Token Service (ITS)

Hyperlane – Warp Tokens

Chainlink – Cross-Chain Token (CCT) standard

Glacis – provides access to any interop token standard and gives issuers the flexibility to manage risk across multiple interop protocols with its support for the xERC20 standard.

SuperchainERC20 – an implementation of ERC-7802: Crosschain Token Interface (similar to xERC20 in many ways) to facilitate the burn-and-mint mechanism for tokens across the Superchain.

The adoption of these token standards has been significant, with institutions like PayPal and BlackRock, bluechip crypto protocols like Sky (formerly MakerDAO), memecoins like WIF, high value assets like wrapped Bitcoin, global stablecoins, CBDCs, and tokenized US treasuries all adopting these standards.

Together, the value secured across these token standards exceeds $100 billion (when you factor in the FDV of tokens issued on top of them), and the growth is only going to accelerate from here. As more tokens launch, it’s clear that these standards will become a fundamental consideration, with each new token likely adopting one interop token standard or another.

Source: Bryan on Twitter

Tokenization of assets is happening at an unprecedented rate. Alongside chains and ecosystem teams, interop protocols will play the most important role in onboarding institutions into crypto.

In the near future, every institution will have a tokenized product onchain, and interoperability will be a critical consideration from Day 1. All major interop players are collaborating closely with institutions, helping them tokenize through interop token standards and meet their needs to connect with the broader ecosystem.

With so many chains to choose from, no single chain will be enough. Gone are the days when launching a token on Ethereum was the go-to-market strategy. There are now too many chains with potential markets to tap into, and the only way to scale across them in a clean, future-proof way is with interop protocols.

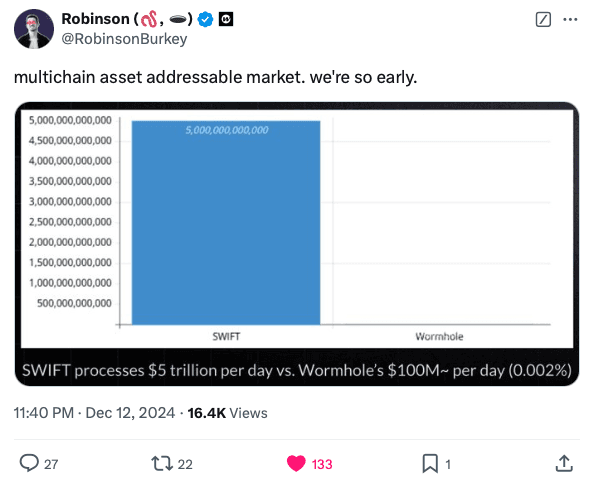

The bull case for interop token standards is, in many ways, the bull case for crypto itself — everything will eventually be tokenized onchain. Real World Assets (RWAs) could represent a $16 trillion opportunity, and with where the puck is going, these assets will be interoperable across chains, and a substantial chunk of it will be issued on interop token standards.

Source: Robinson on Twitter

Every Ecosystem Is Prioritizing Interop

Make Ethereum Unified Again

2024 turned out to be a test for the Ethereum community in every sense, marked by fragmentation in user experience and a lack of unified direction:

The broader community struggled to build applications that simplify user onboarding (interop protocols solve this: the solutions are already out there; they just need to be used more effectively).

The launch of numerous new L2s and their growing adoption sparked heated debates, with some arguing that these rollups were parasitic to the L1 ecosystem.

A cultural clash emerged between the Ethereum “OGs,” who wanted to stick to the original roadmap, and newer voices pushing to scale the L1 or even abandon the rollup-centric approach.

Meanwhile, competitors like Solana steadily gained ground, with users pointing to the ease of UX as one of the key differentiators.

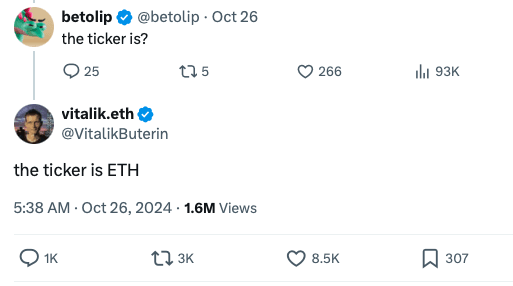

By the end of the year, all of this tension culminated in what some referred to as “wartime Vitalik” mode. Vitalik, often the calm voice of Ethereum, tweeted “the ticker is $ETH” and became increasingly vocal — through writings, panels, and podcast appearances — about the need to address Ethereum’s fragmentation challenges and unify the community’s efforts.

Source: Vitalik on Twitter

It seemed to resonate (maybe?). Standardization for interop became a major talking point for Ethereum, and the community is rallying behind a broader push to expedite standards that address ongoing UX challenges. As part of these efforts, ERC-7683, a cross-chain intents standard meant to unify Ethereum through intents (still a work in progress and not yet live), has begun to gain traction, especially with Vitalik's support.

Perhaps 2024 will be remembered as a year of growing pains — one that forced Ethereum to take decisive action towards improving the user and developer experience.

That said, significant progress has already been made:

The Dencun upgrade shipped, introducing EIP-4844 blobs and making gas fees on L2s cheaper than ever, resulting in a noticeably better UX.

Wallets like Rabby have significantly improved the experience of using these chains.

Bridge connectivity across the ecosystem has vastly improved. Today, bridges are faster and cheaper than ever.

In-app integrations, such as MetaMask, Phantom, Rabby, Binance wallet and many others integrating LI.FI, have simplified cross-chain usage.

Additionally, several chain abstraction protocols went live to further these goals, pushing Ethereum closer to a more unified ecosystem.

Looking ahead, the day when Ethereum feels unified again seems within reach, though there are still many challenges to address. It will be interesting to see how it all unfolds.

Clusters With Native Interop Solutions

One of the more interesting things happening in crypto right now is the race to build clusters of interconnected chains. It’s as if chains are realizing that being part of a network (a zone of chains connected by shared standards) is better than going solo and trying to build walled gardens. I like to think of them as special economic zones in crypto where all the chains contribute and work together to grow the pie. You see this in projects like:

Optimism and the Superchain

ZKsync and the Elastic Chain

Polygon and the Agglayer

Arbitrum and Orbit chains

Ecosystems like Initia and Dymension

Remember Cosmos?

What’s key is making these clusters feel like a single unified system: from a user’s perspective, they need to feel like part of the same chain. That’s why all the clusters are building native interop solutions. Plus, projects like Espresso, and interop protocols in general, are working to make the experience between clusters seamless (for example, LayerZero’s OFT standard being natively supported on Arbitrum Orbit).

While none of the native interop solutions are live yet, it’s clear they’re on the horizon. The pace of chain launches across clusters and the growing number of interop features in development suggest that things are gradually moving from theory to testing, and eventually to production. A notable example is the Optimism Superchain, where interoperability is central to the value proposition. Optimism is making tangible progress toward realizing it and is working to bootstrap liquidity throughout the ecosystem through campaigns like Optimism Superfest (powered by Jumper).

Source: Vitalik on Twitter

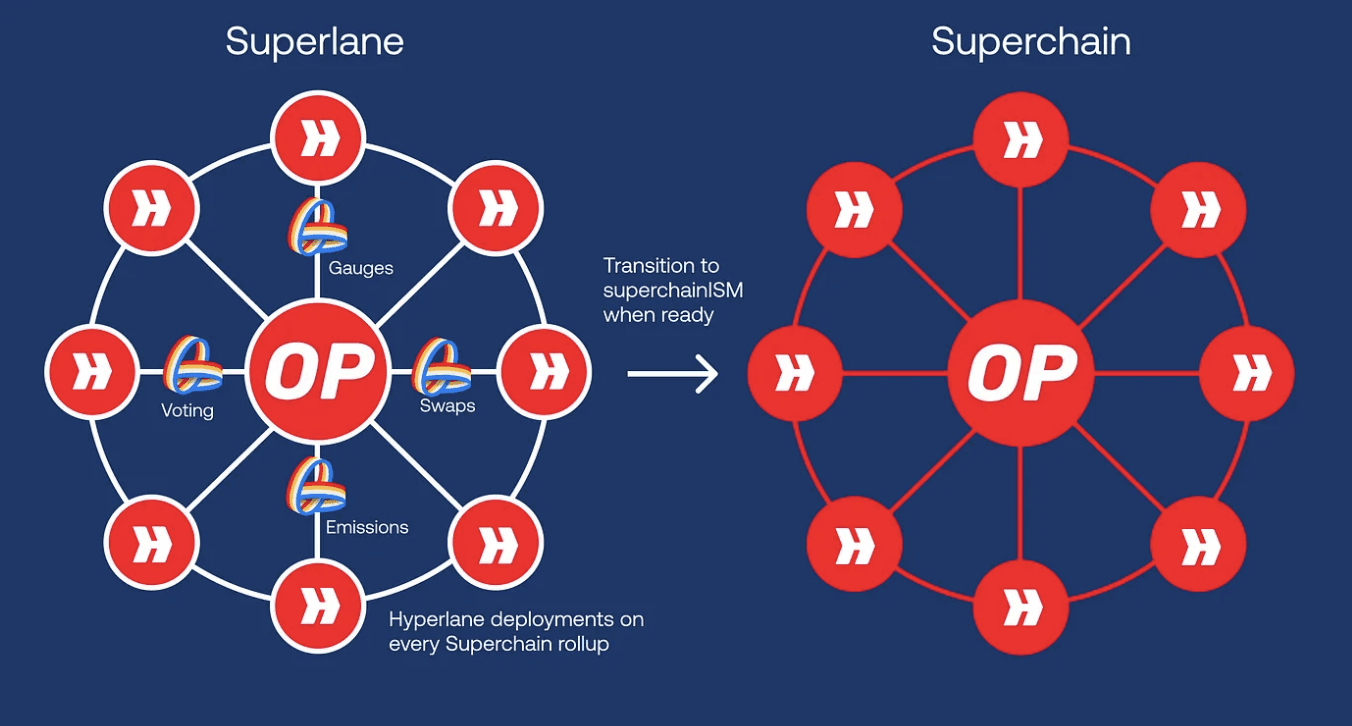

Moreover, there’s also a lot of experimentation happening while we wait for native solutions to go live. Superlane by Hyperlane and Velodrome is a good example of this.

Superlane shows how building on interoperability protocols future-proofs teams:

It lets them connect to all the chains they care about today while staying ready to expand to any new chain that launches tomorrow.

It also allows them to use the best verification mechanisms currently available, and when native interoperability solutions like Superchain (or any other verification mechanism) emerge, they can seamlessly swap them in.

Source: What is the Superchain + Why Superlane? (Hyperlane)

You Simply Cannot Ignore Solana

Solana is the preferred destination for trading today. It’s one of the most active ecosystems across every key metric — volume, developers, users, new apps.

Historically, interoperability protocols have been largely EVM-focused, with very few adding Solana routes.

However, that’s changed now. More teams have realized the market potential on Solana and have taken the leap to overcome the technical challenge of expanding to another virtual machine beyond EVM.

A growing number of bridges have now added Solana support, and this is quickly becoming a must-have for any team that hasn't already done so:

Jumper added Solana routes.

Circle CCTP expanded to Solana.

Relay supports Solana.

Rhino now supports Solana.

LayerZero is live on Solana.

Solana is now live on THORSwap.

Chainflip shipped Solana.

Hyperlane is live on Solana and is expanding across the SVM.

Bridges like Mayan and Wormhole Portal continue to see more and more usage for their Solana routes.

Editor’s note: In addition to Solana, native Bitcoin has become another key asset/route that many protocols have added to their stack. For example, native Bitcoin support is now live on Jumper, Relay, Catalyst, and Router, in addition to established players like THORChain and Chainflip, who’ve been offering it for a while.

In many ways, Solana is speedrunning Ethereum and the EVM’s playbook, but with the benefit of hindsight, learning from Ethereum’s early UX challenges. The SVM ecosystem is slowly taking shape, with chains like Eclipse going live and many Solana apps planning to launch their own SVM chains in the future (Solana L2s?). Going forward, we’ll likely see increasing demand for interoperability in the Solana and SVM ecosystem.

Interop Is Where the Action Is for Users, Developers, and Investors

TGEs and Airdrops

Interop protocols continue to be one of the most successful verticals in crypto, and the ecosystem is currently experiencing a wave of tokenization and airdrops, with billions of dollars being distributed.

Looking back, it seems inevitable that these protocols would eventually tokenize — some even mentioned it in their documentation. If I were smarter and had the knowledge I do now, I would have capitalized more on this opportunity. But, alas, let it be a lesson for the next cycle.

Here are some of the notable airdrop launches by interop protocols right now, with many reaching impressive valuations:

Wormhole’s $W airdrop

LayerZero airdropping $ZRO to 1.28 million wallets

Omni Network’s $OMNI genesis airdrop (hear their story)

Hyperlane announced their token

New Interop Protocols

Just when it seems like the interop space is saturated, a new team emerges, shaking things up and carving out its own niche in this highly competitive field.

Some of the recent notable launches:

Relay – gaining traction as one of the most popular intent-based bridges among users

Catalyst – having pivoted from an AMM design to intent-based solutions, now focusing on altVMs (hear their story)

Mach – implementing intents on LayerZero (hear their story)

Union – a settlement layer for all execution environments

Polymer – delivering real-time interoperability to Ethereum rollups (hear their story)

The continued influx of new teams and projects in the interop ecosystem signals that the vertical is only getting more diverse and exciting.

Interop/Acc

The most powerful technologies in history all fade into the background. Electricity, the internet, cloud computing: they saw real adoption when people stopped thinking about how the tech worked and started focusing on what they could build with it.

In 2025, interoperability will enter this phase. It won’t be seen as an add-on for an app or a challenge to overcome for chains — it’ll just be there, silently connecting everything in crypto. The applications people use, the transactions they make, the value they move — it’ll all run on interop rails.

Without interop, crypto fails. Until now, we’ve done the hard work of laying its foundations. In 2025, interop will accelerate.

— — —

A big thank you to Angus, Kram, Philipp, Bo, Jim, Nam (and the rest of the Hyperlane researchoor gang) along with several others for valuable input, constructive pushback, and related discussions.


Get Started With LI.FI Today

Enjoyed reading our research? To learn more about us:

- Head to our link portal at link3.to

- Read our SDK ‘quick start’ at docs.li.fi

- Subscribe to our newsletter on Substack

- Follow our Telegram Newsletter

- Follow us on X & LinkedIn

Disclaimer: This article is only meant for informational purposes. The projects mentioned in the article are our partners, but we encourage you to do your due diligence before using or buying tokens of any protocol mentioned. This is not financial advice.